Ant-Man & The Wasp: Quantumania

Virtual Production Pipeline Engineering for VR scouting

Client: Marvel Studios / Happy Mushroom

Production: Ant-Man & The Wasp: Quantumania

Project Duration: January 2021 – August 2021

In a sentence

Providing Pipeline Engineering support for the Virtual Art Department, we built custom Unreal Engine plugins, managed Perforce version control, and coordinated international multi-user VR scouting sessions that enabled Marvel's key creatives to collaboratively scout quantum realm sets during COVID lockdowns.

We're proud to have been part of the Virtual Art Department [VAD] / Virtual Production crew at Happy Mushroom (now Narwhal Studios) working on the virtual sets for this film.

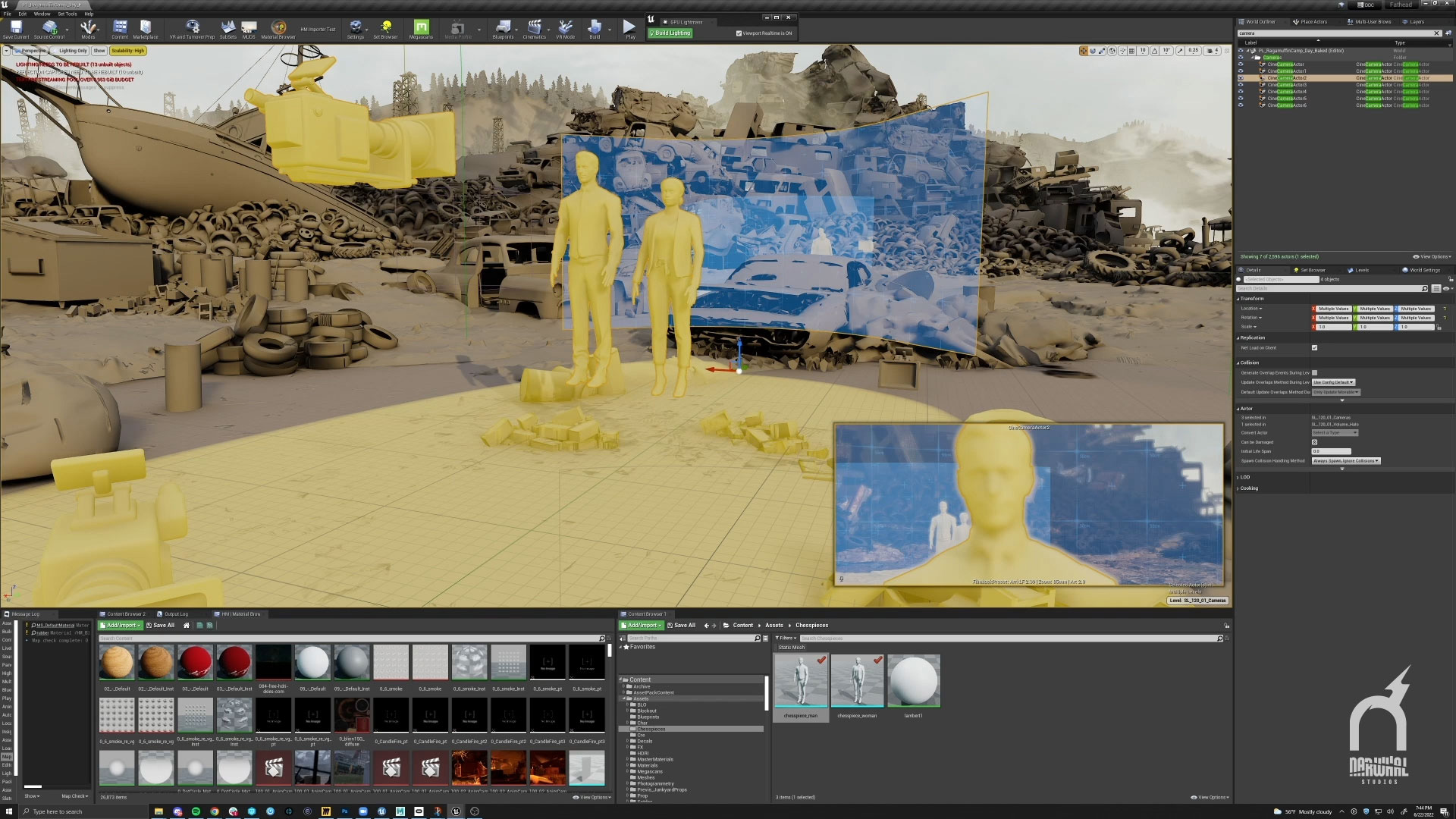

A significant portion of 2021 was devoted to enabling key creatives – Director, Director of Photography, VFX Supervisor, Art Department leads – to get together and scout sets in collaborative, multi-user VR sessions. This was especially important because production started during COVID lockdowns: meeting on virtual sets allowed them to continue the planning process.

The image above is from a VR scouting session in action. Please note: the images are courtesy of Narwhal Studios and represent some of the process but are not from this particular production. Check out their courses about world building in Unreal.

What is VR scouting?

Virtual scouting provides filmmakers with a VR platform to explore digital environments from any angle. These tools enable directors and DOPs to find locations, compose shots, and establish scene dynamics with accurate site representations. Meanwhile, artists and set designers can build and adjust settings in VR, using tools to verify distances and modify scenes. Images from the VR world can be captured for team reference.

The challenge

The production needed a robust VR scouting pipeline that would:

- Enable international collaboration across Hawaii, LA, and London time zones

- Handle complex quantum realm environments optimised for VR performance

- Support multiple simultaneous users scouting sets in real-time

- Capture session data (camera placements, notes, lighting changes) for direct handoff to ILM

- Work reliably for directors and key creatives, many new to VR

- Maintain version control across rapidly evolving virtual sets

The international multi-user aspect presented particular latency challenges – keeping VR sessions synchronised and responsive across such geographic distances required careful infrastructure planning.

What we did

Providing Pipeline Engineering support for the Virtual Art Department, we handled the technical infrastructure that enabled VR scouting at scale.

- Custom Unreal Engine plugin development – designed and implemented new tools and features for Virtual Production workflows, including custom engine features to support production needs

- Pipeline and version control – managed asset pipeline and Perforce version control, working closely with Technical Artists to ensure sets were optimised and VR-ready

- International multi-user server management – coordinated VR scouting sessions across Hawaii–LA–London, managing infrastructure and addressing latency challenges

- Session management and data capture – managed live VR sessions, captured session data (camera positions, notes, scene changes, lighting adjustments), and packaged updates so ILM could access them directly

- VR scene optimisation – optimised virtual scenes and sets for VR performance

- UX and accessibility improvements – enhanced the user experience and workflow of VR Virtual Production utilities

- VR tool inductions – delivered training for directors and key creatives including Bill Pope and Sam Esmail, ensuring technology remained as invisible and accessible as possible

During VR scouts, the role involved managing the live session – handling any issues key creatives encountered with VR equipment, ensuring smooth operation, and making certain all session data was properly saved and processed for the downstream pipeline.

Not for the first time (see also Jurassic World VR Expedition and other projects), this involved working closely with a large team across several time zones, coordinating complex real-time collaboration.

In short: enabling artists to get on with their job and making the technology as invisible and accessible as possible.

From VR scout to StageCraft volume

Once sets were scouted, we would capture a snapshot of the session – with all notes, scene changes, lighting adjustments and placed cameras – and publish it into Perforce for ILM to access directly. From there, ILM would take over the downstream work: refining, optimising and re-authoring what was needed to make the environments StageCraft “volume” ready. This is the same LED screen setup that The Mandalorian was made with; in fact, some of the Narwhal Studios artists had just come over to this project from working on the Star Wars series 🙌.
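To give a flavour of what a session snapshot can look like as data, here is a minimal Python sketch that bundles placed cameras, notes and lighting adjustments into one JSON manifest. It is an illustration only, not the format used on the production: every class name, field name, unit and example value below is a hypothetical assumption, and the step of publishing the file into Perforce is deliberately left out.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List, Tuple


@dataclass
class PlacedCamera:
    # Hypothetical fields; units are assumptions for this sketch.
    name: str
    location: Tuple[float, float, float]  # world position, assumed centimetres
    rotation: Tuple[float, float, float]  # pitch, yaw, roll in degrees
    focal_length_mm: float


@dataclass
class ScoutNote:
    author: str
    text: str


@dataclass
class LightingChange:
    light_name: str
    intensity: float


@dataclass
class SessionSnapshot:
    # One record per scouting session (hypothetical schema).
    set_name: str
    session_id: str
    cameras: List[PlacedCamera] = field(default_factory=list)
    notes: List[ScoutNote] = field(default_factory=list)
    lighting_changes: List[LightingChange] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict() recursively converts nested dataclasses to plain dicts;
        # sort_keys keeps the output stable, which makes diffs easier to read.
        return json.dumps(asdict(self), indent=2, sort_keys=True)


def build_example_snapshot() -> SessionSnapshot:
    # Placeholder values only; none of this is real production data.
    return SessionSnapshot(
        set_name="example_set",
        session_id="example_session_01",
        cameras=[
            PlacedCamera(
                name="cam_A",
                location=(120.0, -40.0, 170.0),
                rotation=(0.0, 90.0, 0.0),
                focal_length_mm=35.0,
            )
        ],
        notes=[ScoutNote(author="example_user", text="Try a lower angle here.")],
        lighting_changes=[LightingChange(light_name="key_light", intensity=0.8)],
    )


if __name__ == "__main__":
    print(build_example_snapshot().to_json())
```

Keeping the snapshot as a single plain-text file with a stable key order is one way to make it easy to review and compare between sessions once it is under version control.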

As with the sets of the film itself, watching the creative process in full flow was mind-blowing and very surreal! A glimpse into the future.

The result

There was a long wait until the film was released in 2023 (with principal photography following our work in 2021, then a lengthy post-production process throughout 2022). Seeing the final shots look so close to what we saw planned in the scouting sessions – enabled through the Narwhal tools and processes we worked on – is a significant testament to the work of the Virtual Art Department. As a team, we really made a difference.

You can see the cast and crew on IMDb. An amazing team!

Here's Narwhal's case study video, showing the sets as planned and how it all turned out in the final shots:

Narwhal Studios case study

Official Trailer 1

Official Trailer 2

What came next

This work was followed by further ambitious virtual production projects for major US productions. These were later cancelled and remain under NDA. As is common in this space, not all work reaches the public domain.

Broader context

This work sits alongside other real-time and GPU-accelerated projects we've delivered – from custom iPad painting tools with ultra-high-resolution export (including David Hockney’s tooling since 2018), to NVIDIA AI SDK integrations for non-headset mixed-reality spectator cameras at live events, and WebRTC video streaming from embedded Jetson devices. The common thread is production-grade engineering for demanding real-time systems.